Interval Research Corporation: a 1990s PARC without a Xerox

What follows is a collection of organized, lightly edited quotes from the material listed in the Resources section.

Introduction

Founded in 1992 by Paul Allen, co-founder of Microsoft Corp., and David Liddle, a computer industry veteran with deep roots in research, Interval is a research setting seeking to define the issues, map out the concepts and create the technology that will be important in the future.

With its long-term resources, Interval pursues basic innovations in a number of early-stage technologies and seeks to foster industries around them, sparking opportunity for entrepreneurs and highlighting a new approach to research.

Typical research areas at Interval include:

Signal computation

Digital entertainment systems

Field ethnography

Adaptive computational structures

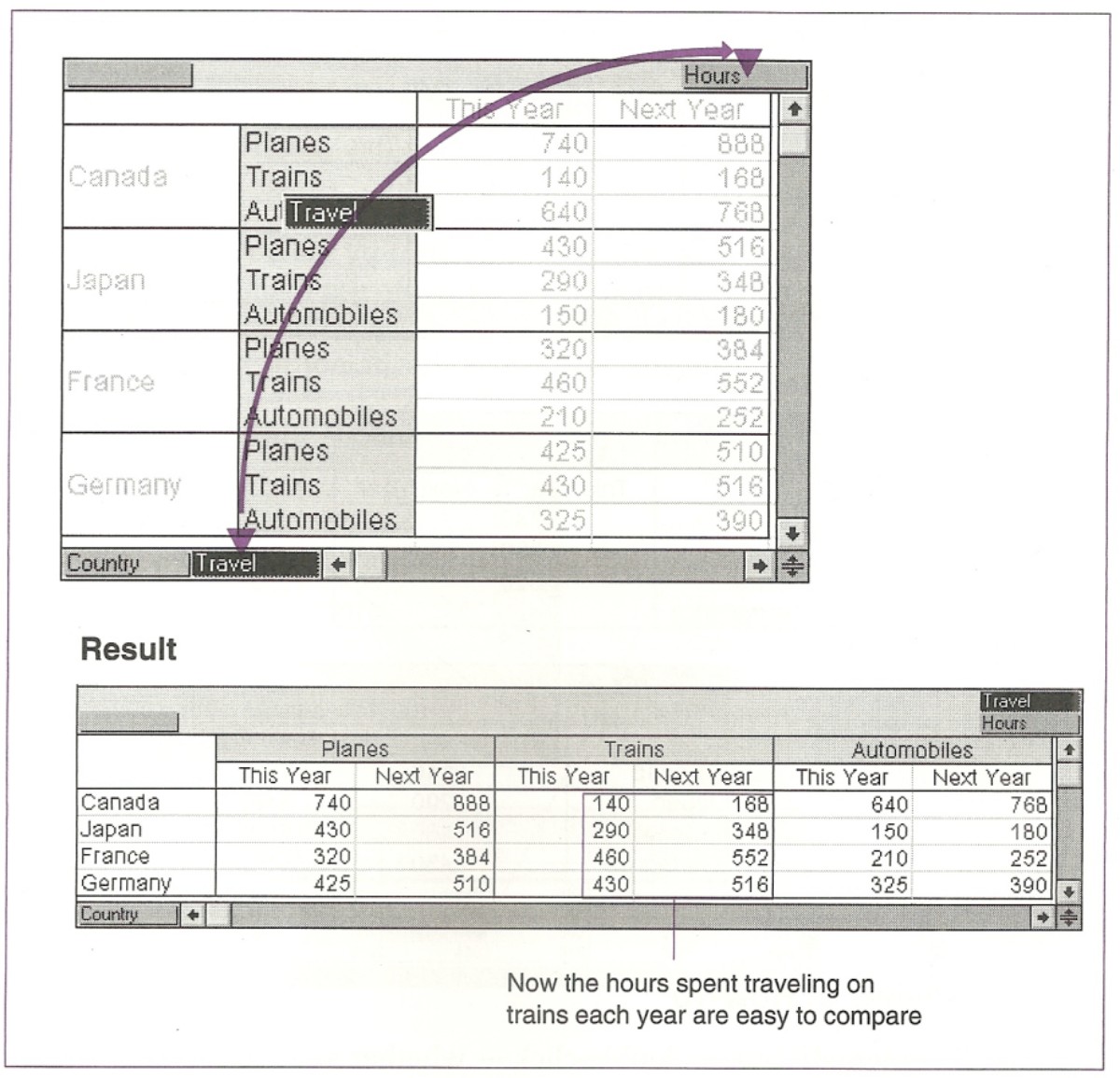

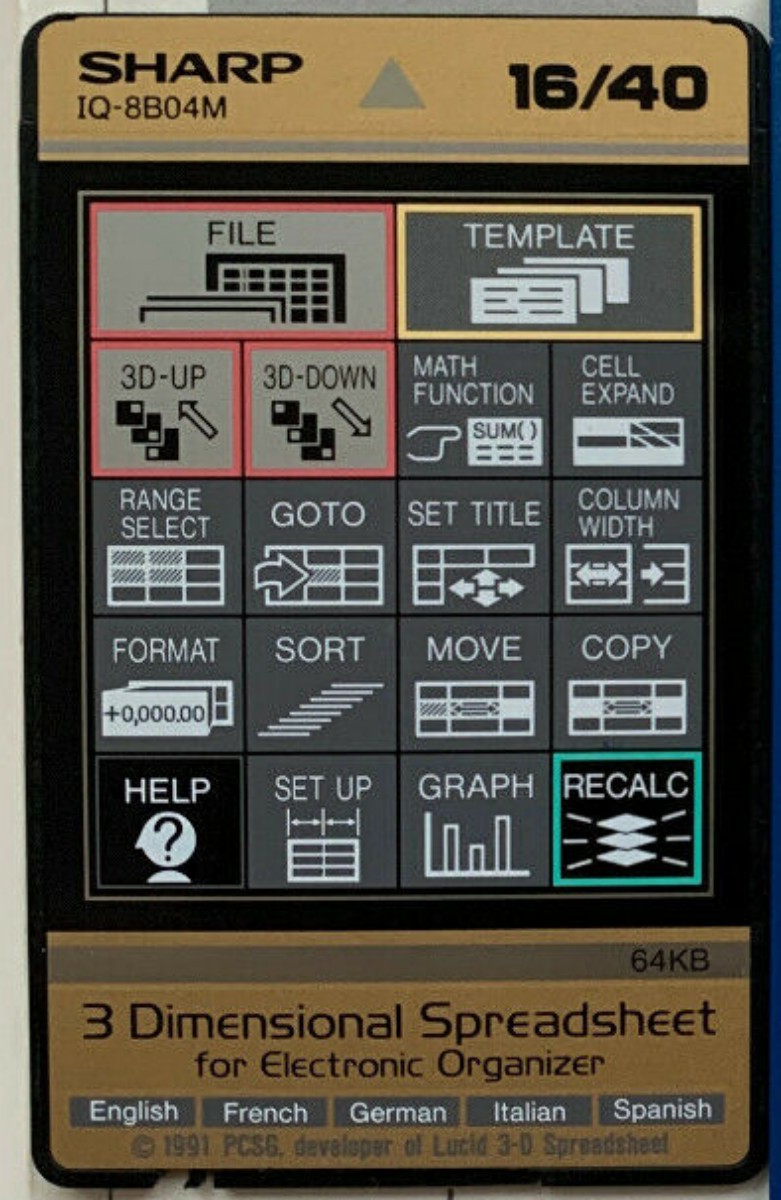

Portable and wearable information technology

Interactive entertainment

Wireless communication and sensing

Network cultures

Design

Technology and lifestyle choices

Immersive environments

On-line journalism

Experimental media

To bring a fresh and real-world perspective to creating these futures, Interval has gathered a broad range of people to make up its research staff, including filmmakers, designers, musicians, cognitive psychologists, artists, computer scientists, journalists, entrepreneurs, engineers and software developers. The company also collaborates with other research groups and university laboratories, including the Royal College of Art, the MIT Media Lab, the Santa Fe Institute, Stanford University and many others.

Technology will change the way we perceive our world. Interval will change the way people feel about technology.

Name

The name Interval refers to the space between: the interregnum dividing the old order from a new world yet to be born, a transition Allen and Liddle originally expected to take about ten years. To bridge this interval, Allen planned to fund the lab for a decade; the years 1992 to 2002 were even printed on company name tags.

People

With 116 scientists and 54 staff members, Interval is not the largest research laboratory in Silicon Valley, where both Xerox and IBM have a major presence. But Interval has always had a special buzz and a collection of talent that, even in the annals of technological genius, stands out.

Its ranks include great inventors from PARC, Atari, and Apple, along with a host of younger researchers plucked from Stanford and the MIT Media Lab. Many of these people are famous, at least in the Valley, for inventing or developing key aspects of the PC revolution.

History

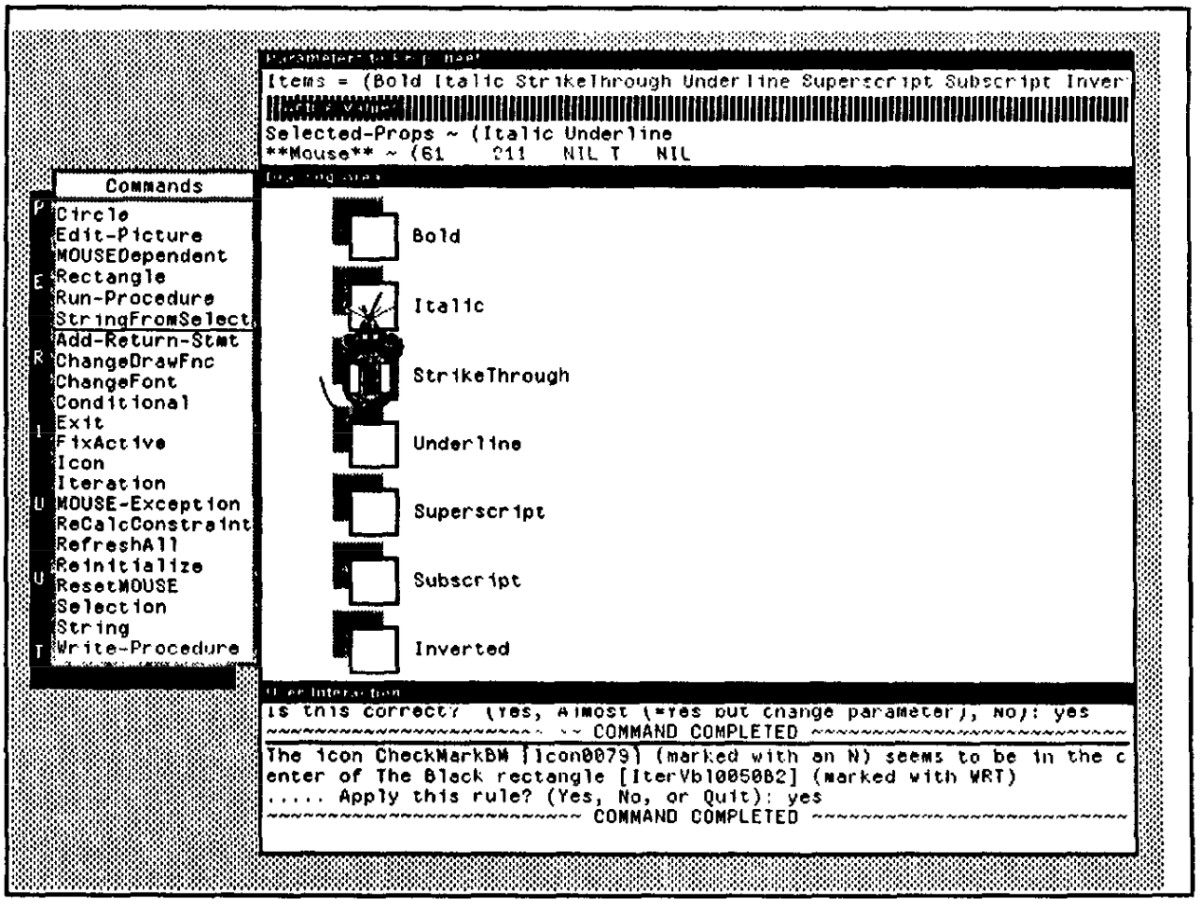

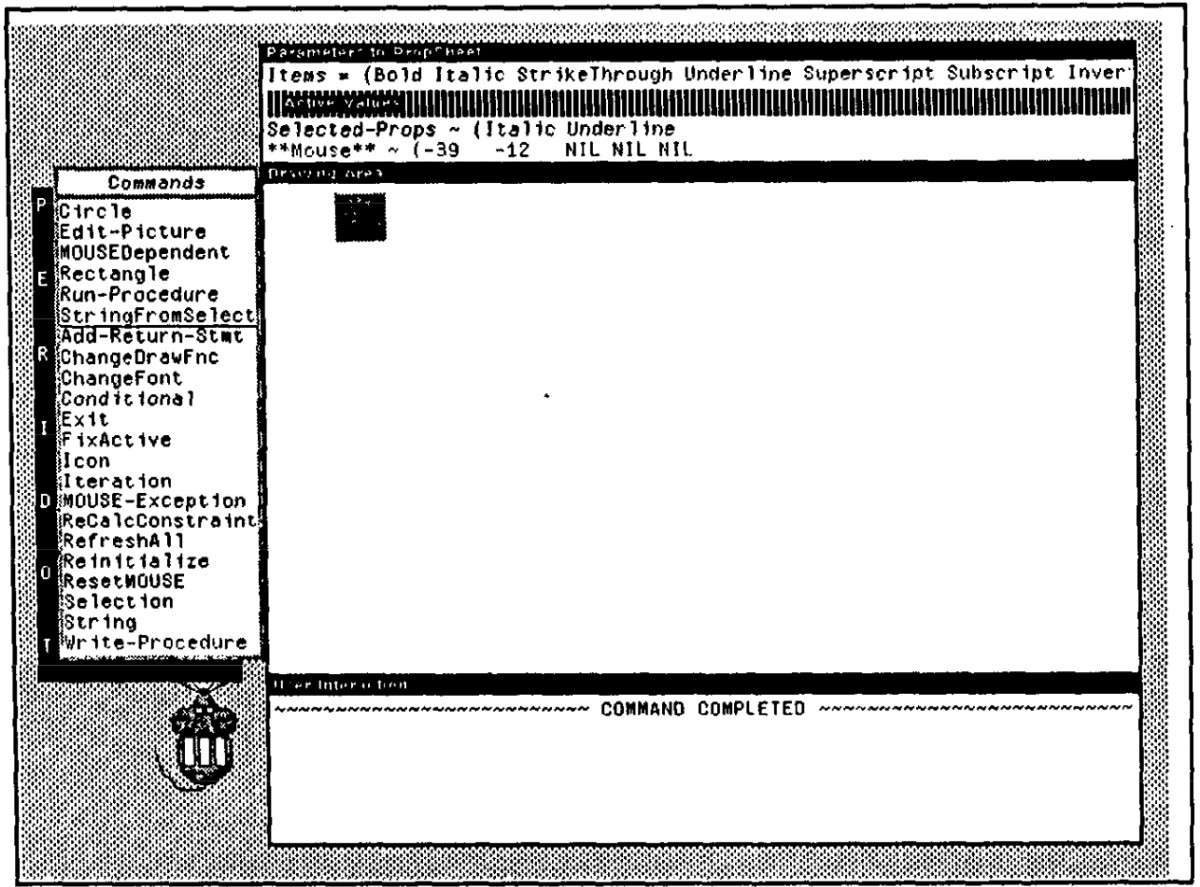

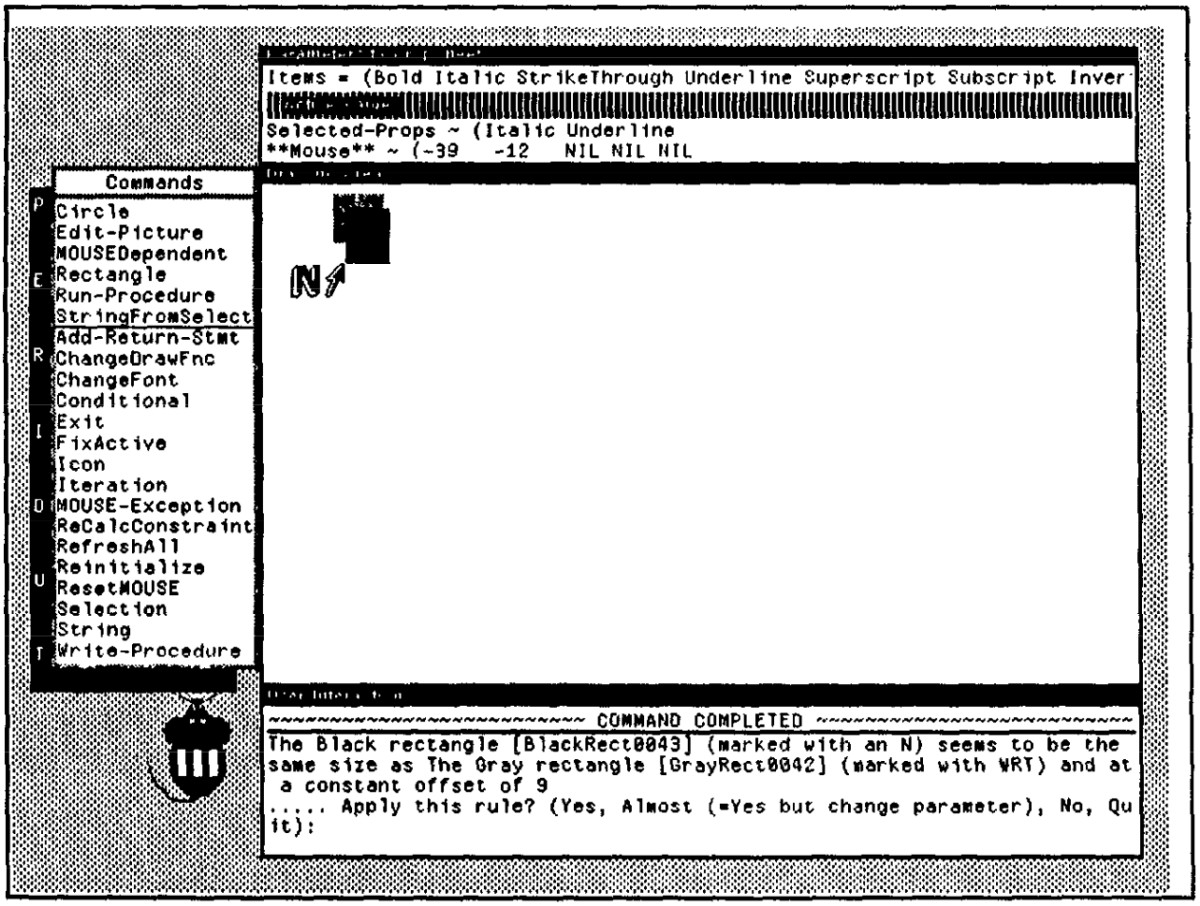

Liddle joined PARC in 1972. Soon he was running the Systems Development Division, formed to sell the Star, Xerox's first commercial workstation. Xerox introduced the first GUI with icons, the desktop metaphor, dialog boxes, object-oriented programming, the laser printer, and the Ethernet LAN - all the great inventions that made Apple, Microsoft, Adobe, 3Com, and other competitors fabulously wealthy. Why Xerox let its technology walk out the door is a long story. Suffice it to say that, for Liddle, the experience left a lasting impression; at Interval, things would be done differently.

Joining forces in '91, Allen and Liddle began staffing Interval with an all-star team of Silicon Valley players. It was Liddle's idea to organize Interval's brainpower around projects, with everyone expected to work on two or three at once. Today, researchers are still scattered randomly throughout the building, and everyone is encouraged to work together. Time is allocated in points, and each researcher has 20 to spend. A project is budgeted a set number of points, and its leader recruits fellow researchers by signing them up for primary (14 points), secondary (6 points), or lesser (3 points) commitments.
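The point scheme is simple budget arithmetic: one primary plus one secondary commitment (14 + 6) spends a researcher's full 20 points, as does one primary plus two lesser commitments (14 + 3 + 3). The Python sketch below enumerates which mixes of two or three commitments fit the budget. It rests on hypothetical rules the source does not spell out: that a researcher's commitments may not exceed 20 points in total, and that the same commitment level may be taken on more than one project. The name `feasible_loads` is purely illustrative, not anything Interval used.

```python
from itertools import combinations_with_replacement

# Commitment levels and their point costs, as reported in the text.
COMMITMENT_POINTS = {"primary": 14, "secondary": 6, "lesser": 3}
BUDGET = 20  # points each researcher has to spend


def feasible_loads(min_projects=2, max_projects=3, budget=BUDGET):
    """Return (levels, total) pairs for commitment mixes that fit the budget.

    Hypothetical assumption: total committed points may not exceed `budget`,
    and a level may repeat (e.g. two "lesser" commitments on two projects).
    """
    loads = []
    for n in range(min_projects, max_projects + 1):
        # Each combo is a tuple of level names, e.g. ("primary", "secondary").
        for combo in combinations_with_replacement(COMMITMENT_POINTS, n):
            total = sum(COMMITMENT_POINTS[level] for level in combo)
            if total <= budget:
                loads.append((combo, total))
    return loads


if __name__ == "__main__":
    for combo, total in feasible_loads():
        print(f"{' + '.join(combo)} = {total} points")
```

Under these assumptions the script lists ten mixes, and only the two noted above use the whole 20-point budget.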

"When Interval grew to over a hundred researchers, David took the seven gray-haired staffers and said, 'Ye shall be area chairs,'" adds Bonnie Johnson, who is one of these chairs (although she is blond). Interval's seven fields of research, spread across areas codenamed Alpha, Bravo, Charlie, and so on, include computer graphics and image processing, new computer design, signal processing, audio research and wearable computing, human-computer interaction, market research, and electronic assembly in Interval's shops.

Collaborations

Interval also collaborates on an ongoing basis with other research groups, university laboratories and new media publishers, including the following:

Royal College of Art

Stanford Computer Science Department

MIT Media Lab

Workshops

The workshop's purpose is to encourage collaboration between disciplines such as design, engineering, art, cognitive science, music, and communication in creating the products of the future. Interdisciplinary collaboration and teamwork are essential ingredients in finding new design solutions, usable interfaces, and appealing products. Interval, in turn, benefits from seeing alternative ideas about future directions for technology from students around the world.

Each fall, six to eight educational institutions are invited to participate. Students work throughout the academic year on a "Design Challenge" set by Interval. Each participating institution then selects one team to come to Palo Alto for a week-long workshop the following July, in which students and faculty share their experiences and results.

1995 Design Challenge

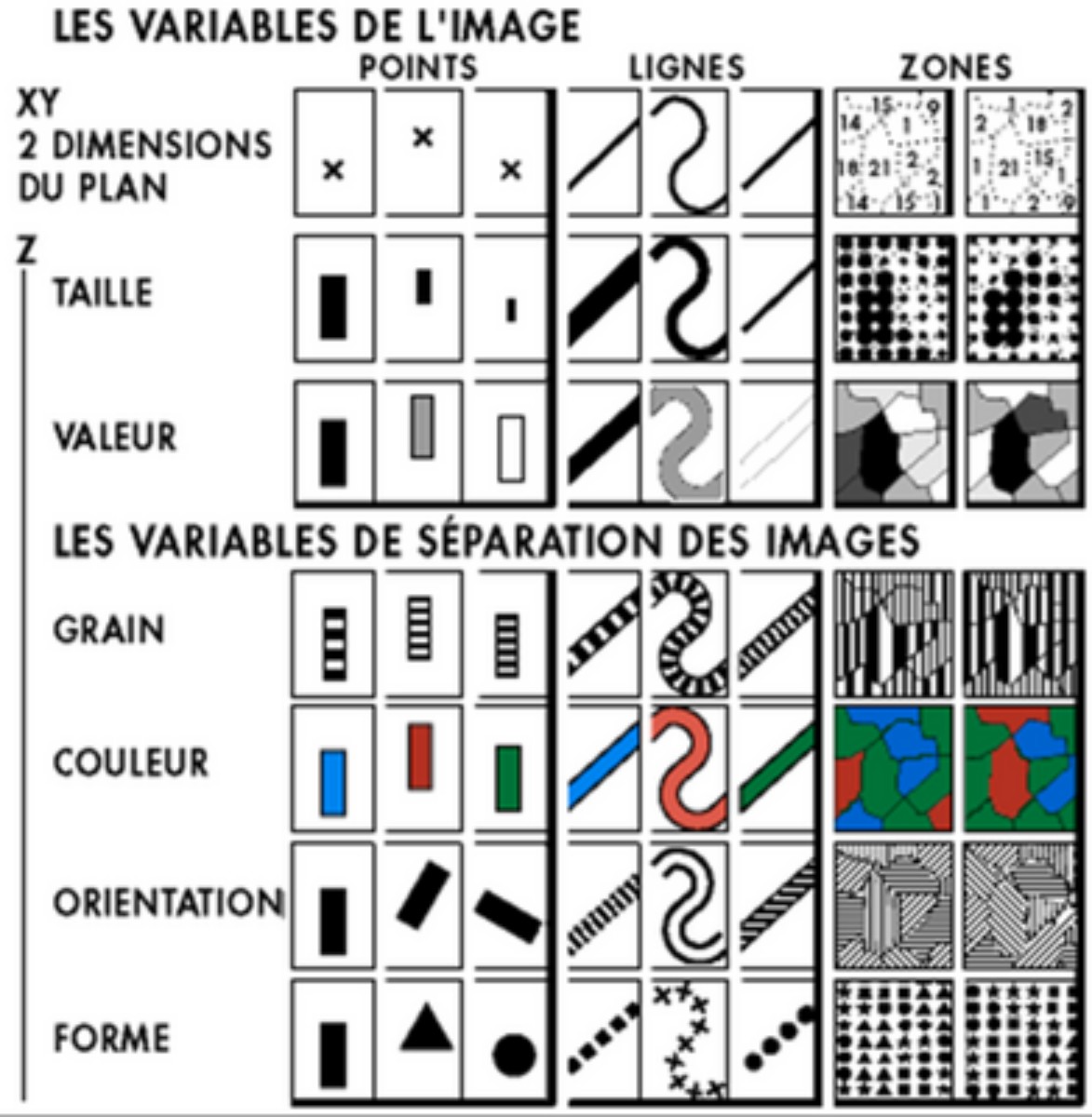

Sound, music and speech are often overlooked as essential and related data types in multimedia. Past work has focused mainly on visual design metaphors, leaving sonic features and elements behind in the development of interfaces. With this in mind, we asked the teams to design new prototype tools and interfaces for accessing sound, music or speech, on the premise that future multimedia will be as much sonic as it is currently visual.

1996 Design Challenge: "Remote Play"

University Workshop projects in 1996 focused on ways in which people can play together using computer-mediated objects and interfaces. All the projects were about some form of "remote play." Students defined for themselves what "remote play" might mean, and each project took a different and delightful direction.

Projects

Studying People

Members of Interval's Research Staff spend much of their time "in the field" talking to and studying people at home, work and play.

Electric Carnival at Lollapalooza: The Electric Carnival offered concertgoers a sampling of 60 digital exhibits from an array of the most innovative artists, software developers, and visionaries working with technology today.

Placeholder: a virtual reality project that explored a new paradigm for narrative action in virtual environments.

Studying Techniques

One of our goals at Interval is to change the way people feel about technology. To bring a fresh and real-world perspective to creating the future, Interval has gathered a broad range of people to make up its research staff, including filmmakers, clothes designers, musicians, cognitive psychologists, artists, computer scientists, journalists, entrepreneurs and software developers. Typical areas of research for Interval include Home Media, Remote Presence, Wearable Technology, Personal Appliances and Music.

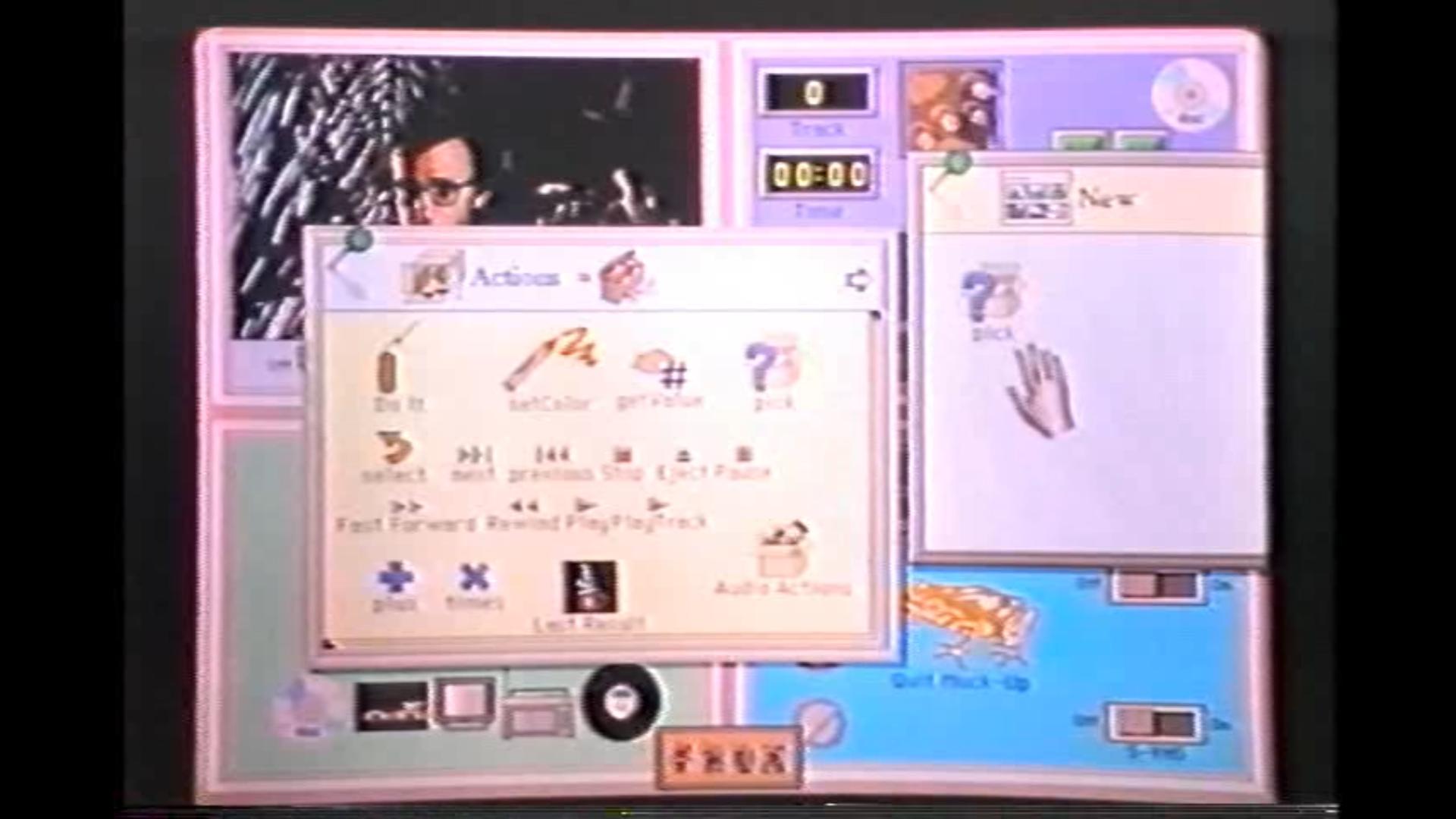

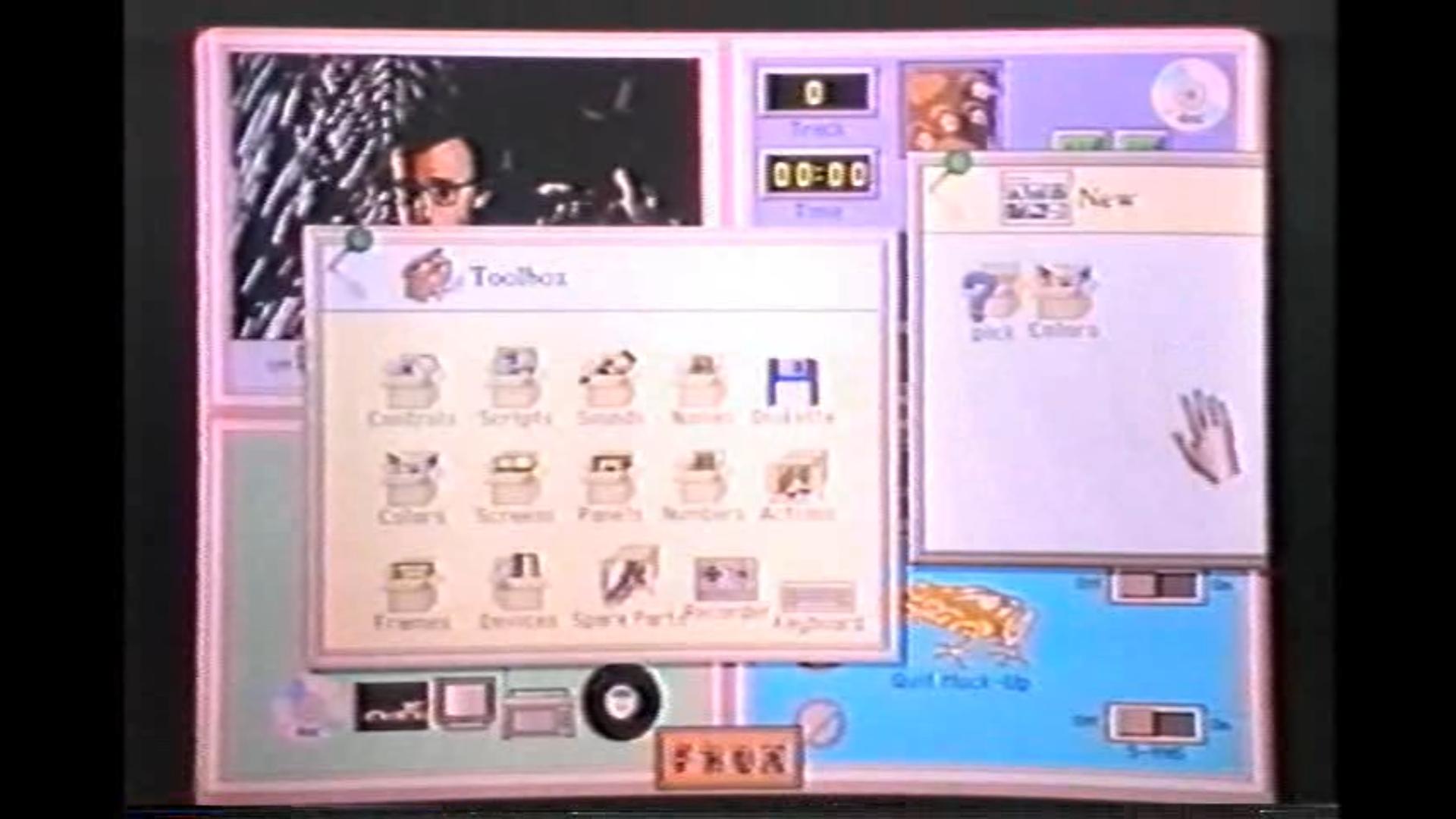

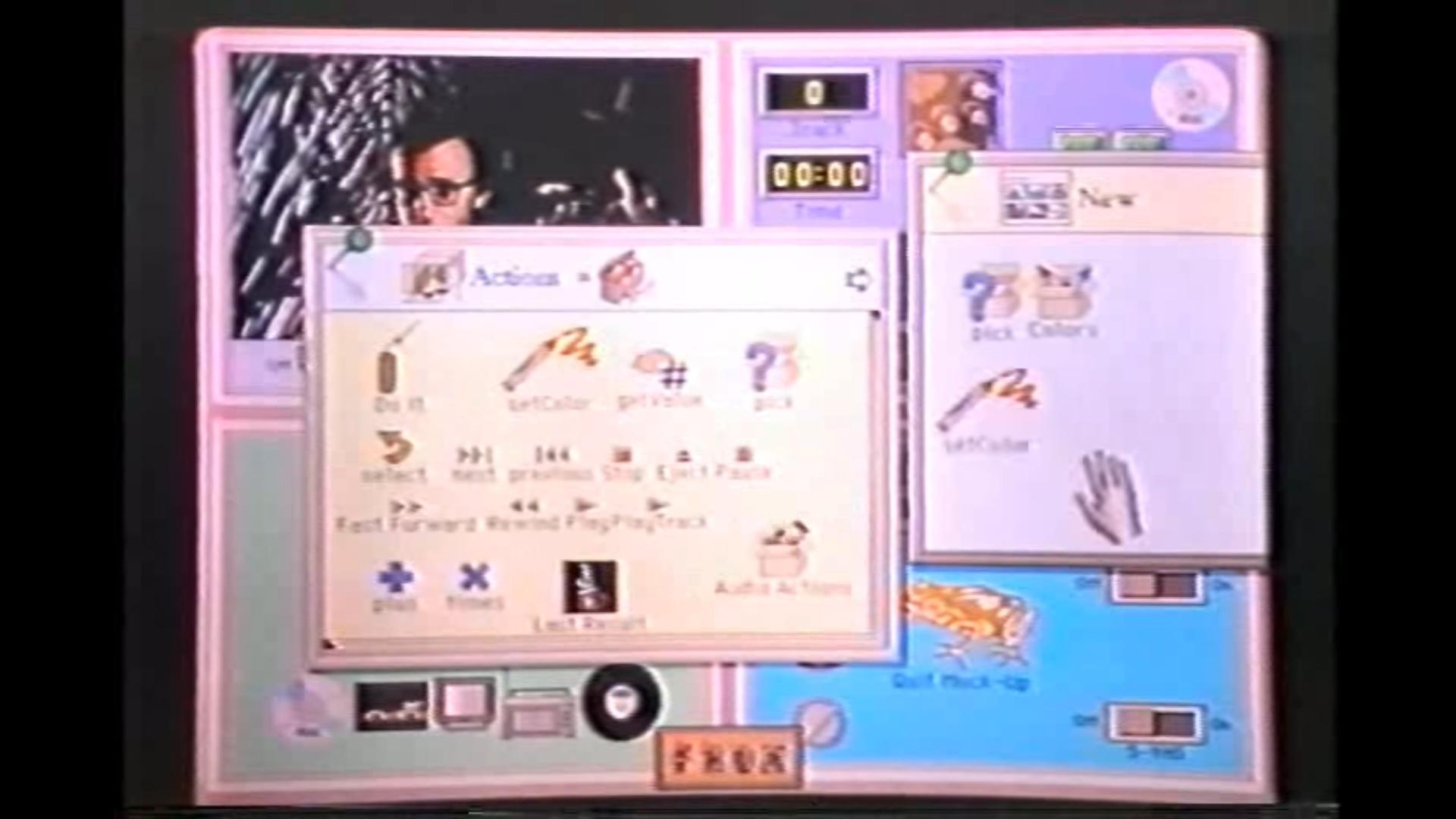

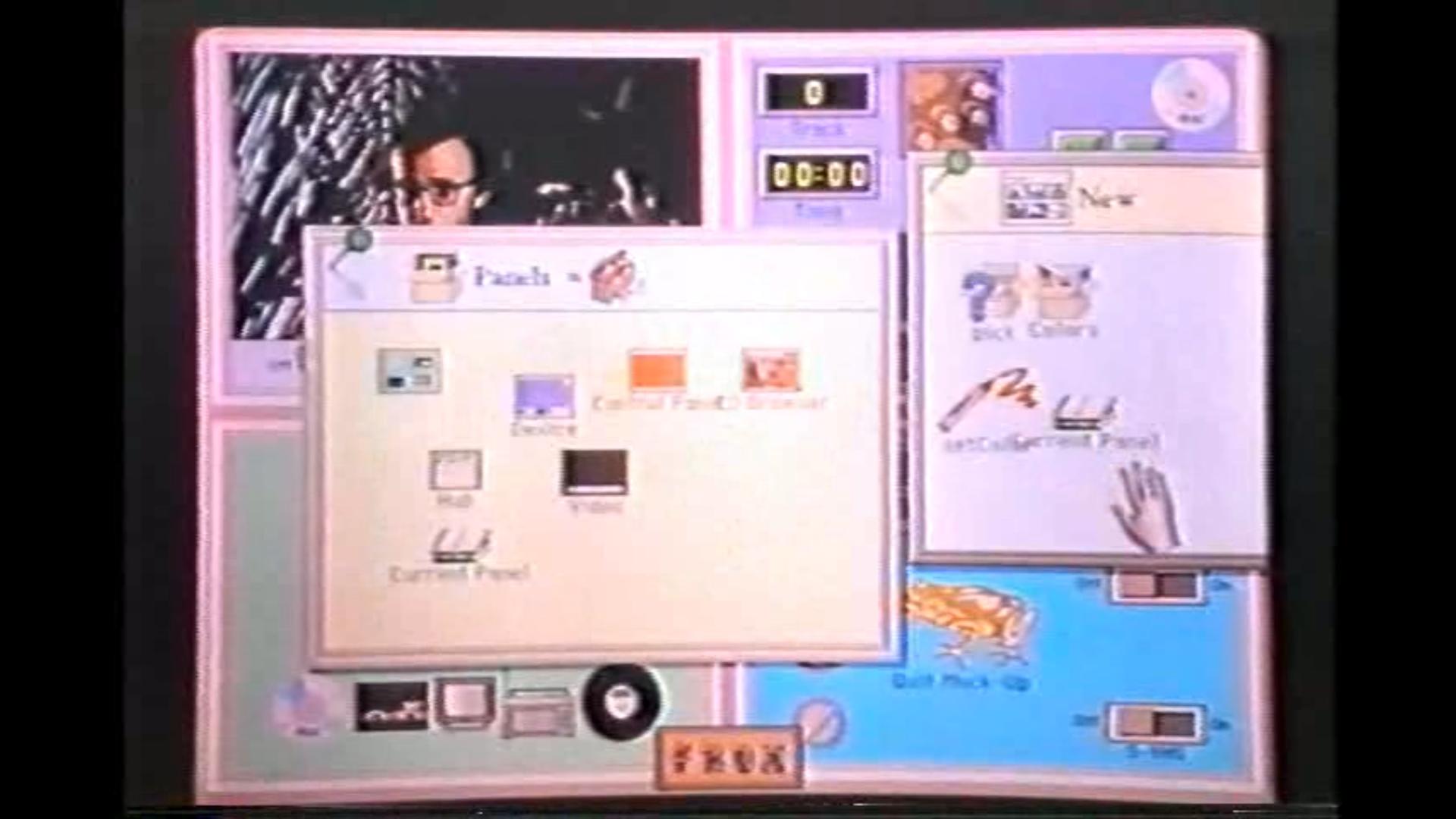

SoundScapes: enabling non-musicians to interact with and play music in rich visual and sonic form using a standard desktop computer system. As part of this exploration, we designed a series of computer 'instruments' to be played alone or together over multiple networked sites by both musicians and non-musicians.

Enterprise: an experiment in journalism, developing a prototype of a business magazine on CD-ROM.

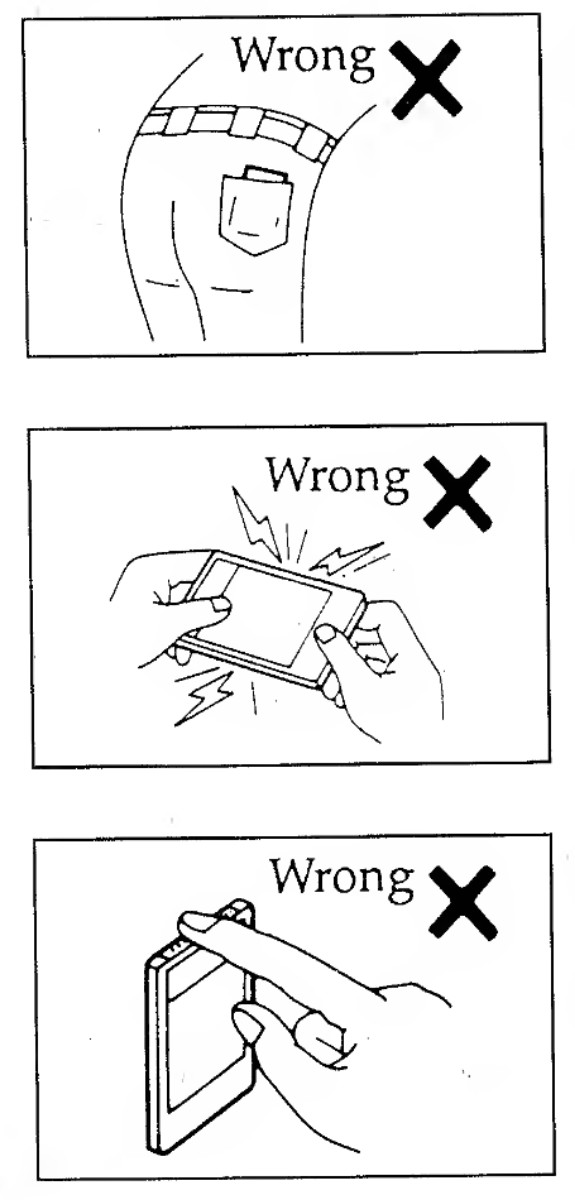

Wearables: a workshop at the Royal College of Art in London. The A'WEAR studio brought students in the industrial design and computer-related design departments together with their counterparts in the fashion & textile department for an intensive, five-day exploration.

Studying Technology

With its long-term resources, Interval pursues basic innovations in a number of pre-competitive technologies and seeks to foster industries around them.

Some of the technology areas we focus upon include Signal Processing, Computation, Representation, Adaptation, Display and Networking.

See Banff!: an interactive stereoscopic kinetoscope installation.

Be Now Here: an immersive virtual environment about landscape and public gathering places. It consists of a large 3D video projection, four-channel surround audio, a simple input device, and a 16-foot-diameter viewing platform on which the audience stands and which rotates once per minute in sync with the panoramic image and sound.

Signal Computation: our homage to the hundredth anniversary of Monet's cathedral paintings. Like Monet's series, our installation is a constellation of impressions, a document of moments and percepts played out over space and time. In our homage, we extend the scope of Monet's study to where he could not go, bringing forth his object of fascination from a hundred feet in the air and across a hundred years of history.

Final Days

After seven years of blue-sky exploration, Interval Research Corporation - the Palo Alto, California, think tank financed by Microsoft cofounder Paul Allen - is coming in for a landing. Open-ended research in information technology is the only life it has ever known, but now the lab is leaving behind the thin air of advanced ideas to work on creating marketable products.

This shift in direction rocked the lab all the way to the top in mid-September, when David Liddle, Interval's founding director and CEO, stepped down.

In a three-sentence statement his press office released, Paul Allen acknowledged Interval's "shift in focus" from pure research to product development.

"When Interval began, we just did cool things. It was 100 percent research, 0 percent development."

Interval came to be revered as perhaps the sole surviving link to the great industrial research facilities of yore - the labs at IBM, AT&T, and Xerox PARC, which themselves have become increasingly commercial and product-driven.

Liddle, a veteran of PARC, strove to take the hard lessons of that famous institution - which managed to foster brilliant ideas but not profit from them - and reinvent the form.

An unusual hybrid between an industrial-research lab and a venture capital fund, Interval was conceived to live off the proceeds of its ideas.

It would seek out commercial applications without sacrificing creative leaps. Liddle has described this hybrid model as "a PARC without a Xerox."

Allen's original $100 million commitment to Interval has since doubled (not counting the money he spent last year to buy the Page Mill Road office complex that houses the lab).

So far, neither he nor the public has much to show for it: some art installations and videos, a touring tent full of computer games, a musical "stick" played by Laurie Anderson, and five spinoff Valley startups.

The lab has also been resolutely private. On the day it opened its doors, it closed them, wrapping itself in a cloud of secrecy.

"I've been visiting Interval since it opened," says Jim Crutchfield, a physicist at the Santa Fe Institute who has worked with Rob Shaw, "and I still have no idea what it does."

Liddle is forthright about what Allen and Interval have learned from their failures: "No more music, no more games."

Interval's history can be divided into three periods. In the early days, everything in Paul Allen's wired world was open to exploration. In the middle years, the lab went its own way, and researchers saw less of Allen in Silicon Valley, except when he came down to play his guitar at the summer picnic. Now comes a new phase, coinciding with Allen's push into the cable industry. "For the first time," Bonnie Johnson says, "he has articulated broadly what he'd like to hear from us."

"There is a social contract here," says one researcher. "We don't make as much money as we could working for a startup company. Nor do we get public recognition, because our research is kept secret. In exchange, we get to work on stuff really at the edge. That's why we're here. This is a watershed moment for a lot of us. We wonder whether the new management understands this social contract."